A Misunderstood Superpower

“Compilers? Isn’t that something only old professors obsess over?”

That is the stereotype. People tend to see compilers as something from the dusty corners of computer science, useful only in academia or in the occasional low-level systems project.

But that’s not what I saw as a developer navigating modern software. I realized that compilers aren’t old relics but one of the most powerful tools we have. They don’t just turn your code into machine instructions; they enable performance, safety, portability, and more.

So here is the question:

What if compilers are more important today than ever, not just behind the scenes but at the heart of the everyday tools we rely on?

I Saw the Sign

At some point, almost everyone who studies computer science dreams of building their own programming language, and I was no exception. For most of us, though, it remains a dream: something that sounds cool in theory but feels too complex to attempt.

I never set out to become a compiler engineer. I assumed that kind of work was reserved for language theorists with PhDs and whiteboards full of Greek letters.

But curiosity got the better of me. One day, I mentioned this dream to a friend, expecting a shrug or a laugh. Instead, he lit up and said, “You should check out Crafting Interpreters by Robert Nystrom.” I had never heard of it, but that recommendation was the spark.

The moment I started reading the book, something clicked. The writing was clear, and the code was approachable. What started as late-night reading quickly turned into hands-on experimentation. I wasn’t just reading; I was building. A tokenizer. A parser. A virtual machine. Each step felt like uncovering a hidden layer of how programming works.

Was it overwhelming at times? Definitely. Bugs drove me crazy, some concepts took days to make sense, and a few frustrating moments almost made me give up. But there was something strangely addictive about writing code that transforms other code.

I wasn’t aiming to create the next Python; I just wanted to learn. Along the way, I discovered how compilers shape the way you think and how they balance elegance with performance, and I came to appreciate the invisible machinery that runs our software.

Compilers Power the AI Boom

At this point, you may be wondering why I’m so obsessed with compilers, and why I’m making a big deal out of something most developers never give a second thought.

For the answer, take a step back and look at where modern computing is headed, especially with AI: compilers are everywhere. It’s easy to dismiss them as abstract or outdated, but in the real world, and especially amid the AI boom, compilers are more relevant than ever.

Every time someone runs an AI model, whether it’s an LLM, an image classifier, or a recommendation engine, there’s compiler technology working in the background to make it fast, efficient, and hardware-aware.

NVCC: The Compiler Foundations of Deep Learning

Take NVCC, NVIDIA’s CUDA compiler, as an example. It has been a cornerstone of high-performance computing since CUDA launched in 2007. CUDA revolutionized GPU programming by letting developers write C-like code that executes across thousands of parallel threads, and NVCC is what turns that code into instructions the GPU can run.
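To make “thousands of parallel threads” a little more concrete, here is a minimal Python sketch of the CUDA execution model. It is only a sequential simulation under simplifying assumptions: real kernels are written in CUDA C/C++ and compiled by NVCC, and the hypothetical `launch` helper stands in for CUDA’s `kernel<<<grid, block>>>(...)` launch syntax. The kernel computes SAXPY (`y = a*x + y`), and each simulated thread derives the element it owns from its block index, the block size, and its thread index, mirroring the common `blockIdx.x * blockDim.x + threadIdx.x` idiom.

```python
from typing import Callable, List


def saxpy_kernel(block_idx: int, block_dim: int, thread_idx: int,
                 n: int, a: float, x: List[float], y: List[float]) -> None:
    # Global index, analogous to blockIdx.x * blockDim.x + threadIdx.x in CUDA C++.
    i = block_idx * block_dim + thread_idx
    if i < n:  # Guard: the grid may contain more threads than elements.
        y[i] = a * x[i] + y[i]


def launch(kernel: Callable[..., None], grid_dim: int, block_dim: int, *args) -> None:
    # Hypothetical, sequential stand-in for kernel<<<grid_dim, block_dim>>>(args...).
    # On a GPU, every (block_idx, thread_idx) pair would run as its own thread.
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


n = 10
x = [1.0] * n
y = [float(i) for i in range(n)]
block_dim = 4
grid_dim = (n + block_dim - 1) // block_dim  # Round up: 3 blocks of 4 threads = 12 threads.
launch(saxpy_kernel, grid_dim, block_dim, n, 2.0, x, y)
print(y)  # [2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0, 11.0]
```

The bounds check is there because the number of threads is rounded up to whole blocks, so the last block can contain threads with no element to process.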

Before the AI boom, CUDA was used mainly in scientific computing, where massive amounts of data had to be processed in parallel. But once researchers discovered that neural networks could be trained dramatically faster on GPUs than on CPUs, thanks to the GPU’s ability to run matrix operations in parallel, CUDA became the bedrock of modern deep learning.

Frameworks like TensorFlow, PyTorch, and Caffe came to rely heavily on CUDA to accelerate training. But here’s the thing: CUDA code doesn’t write itself. Underneath every model training script you’ve seen are GPU kernels, compiled by a compiler like NVCC, that implement the high-level tensor operations the script calls.

As models got more complex and hardware became more diverse, manually writing and maintaining kernel code for every ML operation and architecture became unsustainable. That’s when the idea of machine learning compilers started to take off.

The Rise of Machine Learning Compilers

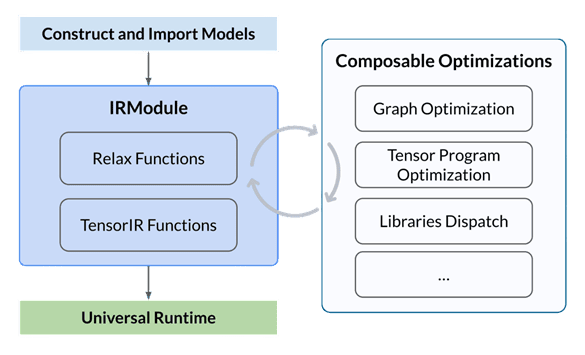

One major turning point was TVM (Tensor Virtual Machine), an open-source machine learning compiler developed at the University of Washington and later moved to the Apache Software Foundation as Apache TVM.

TVM works like this. First, you construct a neural network model or import a pre-trained one from another framework (e.g., PyTorch or ONNX). TVM then takes care of the rest: it transforms the model into its intermediate representation (IR) and applies a series of optimization transformations, including tensor program optimization and library dispatching. Finally, it builds the optimized model and runs it on devices such as CPUs, GPUs, or other accelerators.
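The “optimization transformations” step is easier to picture with a toy example. The Python sketch below is not TVM’s API or IR; the instruction format, `fold_constants`, and `build` are hypothetical stand-ins chosen for illustration. It represents a tiny model as a list of SSA-style instructions, runs one classic optimization pass (constant folding) over it, and then “builds” the result into a single function that applies every remaining operation in one loop over the data, a crude analogue of operator fusion.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple, Union

# Hypothetical toy IR: each instruction writes one named value (SSA style).
# An argument is either the name of an earlier value (or the input "x") or a numeric literal.
Arg = Union[str, float]


@dataclass
class Instr:
    dest: str
    op: str  # one of the keys of ELEMENTWISE
    args: List[Arg]


ELEMENTWISE: Dict[str, Callable[..., float]] = {
    "add": lambda a, b: a + b,
    "mul": lambda a, b: a * b,
    "relu": lambda a: max(a, 0.0),
}


def fold_constants(program: List[Instr]) -> Tuple[List[Instr], Dict[str, float]]:
    """Optimization pass: evaluate, at compile time, every instruction whose args are all constants."""
    consts: Dict[str, float] = {}
    remaining: List[Instr] = []
    for ins in program:
        # Replace references to already-folded values with their constant value.
        args = [consts.get(a, a) if isinstance(a, str) else a for a in ins.args]
        if not any(isinstance(a, str) for a in args):
            consts[ins.dest] = ELEMENTWISE[ins.op](*args)
        else:
            remaining.append(Instr(ins.dest, ins.op, args))
    return remaining, consts


def build(program: List[Instr], consts: Dict[str, float],
          output: str) -> Callable[[List[float]], List[float]]:
    """'Compile' the optimized program into one function that makes a single pass over the data."""
    def kernel(xs: List[float]) -> List[float]:
        results = []
        for x in xs:  # All remaining ops run inside this one loop (a crude form of fusion).
            env: Dict[str, float] = dict(consts, x=x)
            for ins in program:
                vals = [env[a] if isinstance(a, str) else a for a in ins.args]
                env[ins.dest] = ELEMENTWISE[ins.op](*vals)
            results.append(env[output])
        return results
    return kernel


# A tiny "model": relu(x * (2 * 3) + (-3)).
model = [
    Instr("t0", "mul", [2.0, 3.0]),  # constant subexpression: folded away
    Instr("t1", "mul", ["x", "t0"]),
    Instr("t2", "add", ["t1", -3.0]),
    Instr("t3", "relu", ["t2"]),
]
optimized, consts = fold_constants(model)
compiled = build(optimized, consts, output="t3")
print(len(optimized), consts)      # 3 {'t0': 6.0}
print(compiled([0.0, 1.0, -2.0]))  # [0.0, 3.0, 0.0]
```

Real ML compilers apply many such passes over far richer IRs and emit GPU or accelerator code rather than a Python closure, but the shape of the pipeline (represent, transform, build, run) is the same idea.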

It's Not Just TVM: A Quiet Revolution in ML Compilation

While TVM was a big step forward for machine learning compilers, it wasn’t the only one. Take MLIR, an open-source compiler infrastructure project that started at Google and is now part of LLVM. It helps developers build reusable compiler components, making it easier to support new hardware or frameworks without starting from scratch. Today, it underpins key systems such as TensorFlow and the ONNX-MLIR compiler.

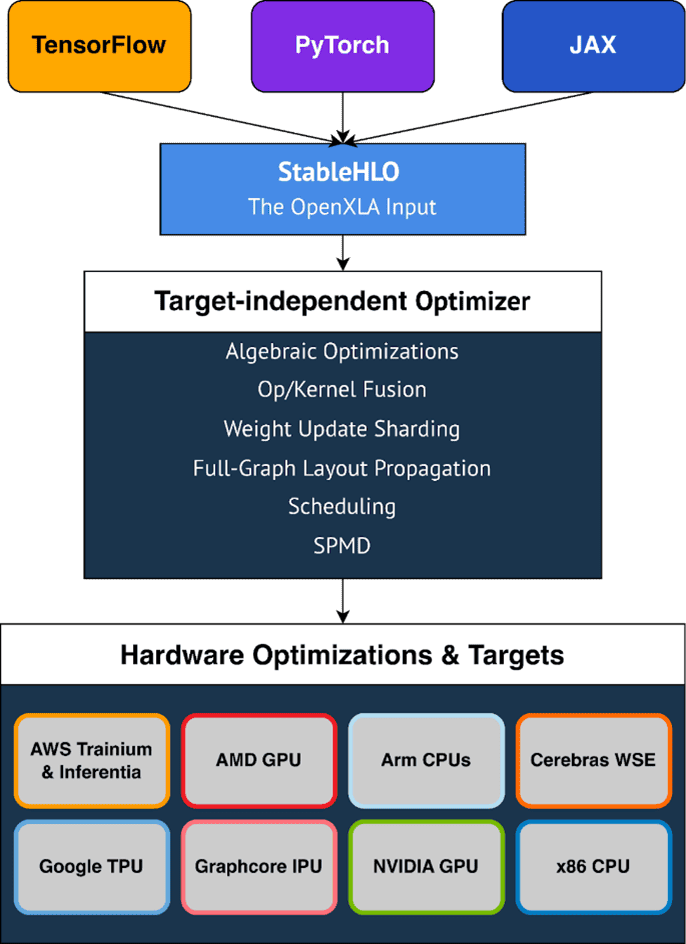

Building on that, Google, together with industry partners, introduced OpenXLA, which combines MLIR with Google’s earlier compiler, XLA.

XLA was great at speeding up TensorFlow by turning model operations into fast, low-level code for CPUs, GPUs, and TPUs, but it lived inside the TensorFlow codebase, which made it difficult to develop and adopt independently.

OpenXLA changes that. It’s modular, open-source, and works across multiple frameworks like JAX and PyTorch. It also lets models run efficiently on anything from cloud TPUs to local GPUs or even custom edge devices, giving developers both performance and flexibility.

The Takeaway?

Modern AI needs hardware. Hardware needs compilers. And compilers need engineers, not just to maintain the magic, but to push it forward.

No One Wants to Be a Compiler Engineer

In most computer science programs, students take one compilers course, often filled with theory, automata, and parsing algorithms, and then promptly move on. For many, it’s just a checkbox, and becoming a compiler engineer never even crosses their minds. When the time comes to choose a career path, the vast majority gravitate toward front-end development, back-end APIs, machine learning, or data science. Few ever look back at the world of compilers.

That could become a serious problem, because the apps we use and the AI models we rely on depend heavily on compiler infrastructure. Frameworks like TensorFlow and PyTorch, and even emerging standards like WebAssembly, are built on sophisticated compilers. They’re just hidden behind clean APIs and user-friendly abstractions.

Under the hood, it’s compilers all the way down, transforming high-level instructions into fast, optimized code tailored for CPUs, GPUs, TPUs, and even custom chips.

Here’s the problem: very few people understand how any of that works, and even fewer are willing to build it. The number of engineers who can develop these systems is small, and the demand is growing.

If LinkedIn job postings are any indication, there are hundreds of open positions at companies building AI frameworks, browsers, cloud runtimes, game engines, and hardware accelerators, and many of those companies are struggling to fill them.

Compilers used to be a niche topic. Now, they're everywhere, and we need people who know how to develop them. Otherwise, the infrastructure that powers everything from your language model to your phone’s browser will remain in the hands of a shrinking few.

Why You Should Build a Language (Even a Tiny One)

You don’t need to be a genius to build a programming language. In fact, building even a tiny toy language can be one of the most rewarding learning experiences in computer science.

When you build a language, you’re not just writing syntax rules; you’re diving into the core ideas that shape all software. You’ll learn how parsers and grammars work, how semantics are designed, and how code is ultimately translated into something a machine can run. You’ll think deeply about architecture, data structures, performance, and how the different parts of a system interact.

It forces you to see the big picture: not just how code is written, but how it runs, how it’s optimized, and what’s happening behind the scenes. More importantly, it gives you a real appreciation for the layers of abstraction we all rely on.
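To get a feel for how small a first step can be, here is a minimal sketch of a toy arithmetic language in Python, loosely following the tokenizer, parser, and virtual machine pipeline described earlier. The grammar, the tuple-based AST, and the opcode names (`PUSH`, `ADD`, and so on) are illustrative choices for this sketch, not taken from any particular book or tool. A scanner produces tokens, a recursive-descent parser builds an AST, a compiler flattens it into stack-machine bytecode, and a tiny VM executes it.

```python
import operator
import re
from typing import List, Optional

# Toy grammar (an illustrative choice):
#   expr   -> term (("+" | "-") term)*
#   term   -> factor (("*" | "/") factor)*
#   factor -> NUMBER | "(" expr ")" | "-" factor


def tokenize(src: str) -> List[str]:
    """Scanner: split source text into number and operator tokens."""
    tokens = re.findall(r"[0-9]+|\S", src)  # a run of ASCII digits, or one non-space char
    for tok in tokens:
        if not (tok[0] in "0123456789" or tok in "+-*/()"):
            raise SyntaxError(f"unexpected character {tok!r}")
    return tokens


class Parser:
    """Recursive-descent parser: one method per grammar rule, producing a tuple AST."""

    def __init__(self, tokens: List[str]) -> None:
        self.tokens, self.pos = tokens, 0

    def peek(self) -> Optional[str]:
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def advance(self) -> Optional[str]:
        tok = self.peek()
        self.pos += 1
        return tok

    def parse(self) -> tuple:
        node = self.expr()
        if self.peek() is not None:
            raise SyntaxError(f"unexpected token {self.peek()!r}")
        return node

    def expr(self) -> tuple:  # + and -, left-associative
        node = self.term()
        while self.peek() in ("+", "-"):
            node = (self.advance(), node, self.term())
        return node

    def term(self) -> tuple:  # * and / bind tighter than + and -
        node = self.factor()
        while self.peek() in ("*", "/"):
            node = (self.advance(), node, self.factor())
        return node

    def factor(self) -> tuple:
        tok = self.advance()
        if tok == "-":
            return ("neg", self.factor())
        if tok == "(":
            node = self.expr()
            if self.advance() != ")":
                raise SyntaxError("expected ')'")
            return node
        if tok is not None and tok[0] in "0123456789":
            return ("num", int(tok))
        raise SyntaxError(f"unexpected token {tok!r}")


OPCODES = {"+": "ADD", "-": "SUB", "*": "MUL", "/": "DIV"}
VM_OPS = {"ADD": operator.add, "SUB": operator.sub,
          "MUL": operator.mul, "DIV": operator.floordiv}  # DIV floors, like Python's //


def compile_ast(node: tuple) -> list:
    """Compiler: emit stack-machine bytecode in post-order (operands first, then operator)."""
    if node[0] == "num":
        return [("PUSH", node[1])]
    if node[0] == "neg":
        return compile_ast(node[1]) + [("NEG",)]
    return compile_ast(node[1]) + compile_ast(node[2]) + [(OPCODES[node[0]],)]


def run_vm(code: list) -> int:
    """Virtual machine: execute bytecode on an operand stack."""
    stack: List[int] = []
    for op, *operands in code:
        if op == "PUSH":
            stack.append(operands[0])
        elif op == "NEG":
            stack.append(-stack.pop())
        else:
            right = stack.pop()
            stack.append(VM_OPS[op](stack.pop(), right))
    return stack.pop()


def evaluate(src: str) -> int:
    return run_vm(compile_ast(Parser(tokenize(src)).parse()))


print(compile_ast(Parser(tokenize("1 + 2")).parse()))  # [('PUSH', 1), ('PUSH', 2), ('ADD',)]
print(evaluate("2 * (3 + 4) - -1"))  # 15
```

Each stage is only a few lines, yet together they mirror the structure of real language implementations; adding variables, functions, or a constant-folding pass is a natural next step.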

References

The Apache Software Foundation. (n.d.). Apache TVM Documentation. https://tvm.apache.org/docs/index.html

Wikipedia contributors. (2025, June 30). MLIR (software). In Wikipedia. https://en.wikipedia.org/wiki/MLIR_(software)

Google Open-Source Blog. (2023, March 8). OpenXLA is available now to accelerate and simplify machine learning. https://opensource.googleblog.com/2023/03/openxla-is-ready-to-accelerate-and-simplify-ml-development.html

Nystrom, R. (2021, July 27). Crafting Interpreters. https://craftinginterpreters.com/